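Besides the core components, there are some recommended add-ons:

| Component | Description |
|---|---|
| kube-dns | Provides DNS for the whole cluster |
| Ingress Controller | Provides external entry points for services |
| Heapster | Provides resource monitoring |
| Dashboard | Provides a GUI |
| Federation | Provides clusters spanning availability zones |
| Fluentd-elasticsearch | Provides cluster log collection, storage, and querying |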

cat >> /etc/hosts <<EOF
10.0.0.202 master
10.0.0.215 etcd
10.0.0.197 node1
10.0.0.163 node2
EOF
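Appending with `cat >> /etc/hosts` adds duplicate lines every time the step is rerun. A minimal sketch of an idempotent alternative, assuming a standard hosts-format file; `add_host_entry` is a hypothetical helper (not part of any package) that appends an `IP NAME` pair only if the name is not already mapped.

```shell
# Hypothetical helper: append "IP NAME" to a hosts-format file unless NAME is already mapped.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if any non-comment line lists NAME as a hostname (field 2 onward)
  if [ -f "$file" ] && awk -v n="$name" '
      /^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, running `add_host_entry 10.0.0.202 master` (and likewise for etcd, node1 and node2) on every host is safe to repeat.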

[root@etcd ~]# yum install etcd -y

vim /etc/etcd/etcd.conf

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.215:2379"
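Editing by line number breaks as soon as a package ships a slightly different config file. A sketch that sets a `KEY="value"` line by key instead, assuming GNU sed (the `0,/regex/` address is a GNU extension, available on CentOS). `set_conf_var` is a hypothetical helper; values must not contain `|` or `&`, which sed would interpret.

```shell
# Hypothetical helper: set KEY="VALUE" in a shell-style config file.
# A matching line (commented out or not) is replaced in place; otherwise the line is appended.
# Assumes GNU sed; VALUE must not contain '|' or '&'.
set_conf_var() {
  local file="$1" key="$2" value="$3"
  if grep -qE "^[#[:space:]]*${key}=" "$file"; then
    # Only the first matching line is rewritten (range 0,/re/ ends at the first match)
    sed -i -E "0,/^[#[:space:]]*${key}=/s|^[#[:space:]]*${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: `set_conf_var /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"`. The same call pattern works for the variables in /etc/kubernetes/apiserver, config and kubelet below.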

systemctl start etcd.service

systemctl enable etcd.service

[root@etcd ~]# etcdctl -C http://10.0.0.215:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://10.0.0.215:2379

cluster is healthy

yum install kubernetes-master.x86_64 -y

vim /etc/kubernetes/apiserver

Line 8: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

Line 11: KUBE_API_PORT="--port=8080"

Line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.215:2379"

Line 23: KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.202:8080"

systemctl enable kube-apiserver.service

systemctl restart kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl restart kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl restart kube-scheduler.service
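The enable/restart pairs above (and the ones on the nodes below) follow one pattern. A minimal sketch that loops over the units and stops at the first failure; `enable_and_restart` and the `SYSTEMCTL` override (set it to `echo` for a dry run) are hypothetical.

```shell
# Hypothetical helper: enable and restart each systemd unit in turn,
# stopping at the first failure. Set SYSTEMCTL=echo for a dry run.
enable_and_restart() {
  local unit
  for unit in "$@"; do
    ${SYSTEMCTL:-systemctl} enable "$unit" || return 1
    ${SYSTEMCTL:-systemctl} restart "$unit" || return 1
  done
}
```

On the master: `enable_and_restart kube-apiserver.service kube-controller-manager.service kube-scheduler.service`; on the nodes: `enable_and_restart kubelet.service kube-proxy.service`.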

Check that the services are healthy:

[root@master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}
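Checking tables like this by eye does not scale to scripts. A sketch that reads a kubectl table on stdin and fails if any row has an unexpected status; `all_rows_have` is a hypothetical helper, and the column numbers assume kubectl's default (non-wide) table layout.

```shell
# Hypothetical helper: read a kubectl table on stdin and succeed only if every
# data row (header skipped) has the expected value in the given 1-based column.
# Offending rows are printed.
all_rows_have() {
  local col="$1" want="$2"
  awk -v c="$col" -v w="$want" '
    NR > 1 && $c != w { bad = 1; print "not " w ": " $1 }
    END { exit bad + 0 }'
}
```

Usage: `kubectl get componentstatus | all_rows_have 2 Healthy`, and after the nodes join, `kubectl get node | all_rows_have 2 Ready`. A node whose status reads `Ready,SchedulingDisabled` is reported as not matching.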

yum install kubernetes-node.x86_64 -y

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.202:8080"

vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=0.0.0.0"

Line 8: KUBELET_PORT="--port=10250"

Line 11: KUBELET_HOSTNAME="--hostname-override=10.0.0.163" # this node's own IP

Line 14: KUBELET_API_SERVER="--api-servers=http://10.0.0.202:8080"

Line 17: KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.202:5000/rhel7/pod-infrastructure:latest" # the pod-infrastructure image must first be pushed to the local registry

systemctl enable kubelet.service

systemctl restart kubelet.service

systemctl enable kube-proxy.service

systemctl restart kube-proxy.service

Check on the master node:

[root@master ~]# kubectl get node

NAME STATUS AGE

10.0.0.163 Ready 1d

10.0.0.197 Ready 1d

yum install flannel -y

sed -i 's#http://127.0.0.1:2379#http://10.0.0.215:2379#g' /etc/sysconfig/flanneld

## On the etcd node:

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }'
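With `"Network": "172.18.0.0/16"` and flannel's usual default `SubnetLen` of 24, each host leases one /24 (consistent with the pod IPs later on, e.g. 172.18.42.x on 10.0.0.197 and 172.18.96.x on 10.0.0.163), so the network has room for 256 hosts. A sketch of that arithmetic; `flannel_subnet_count` is a hypothetical helper.

```shell
# Hypothetical helper: how many per-host subnets flannel can lease from its
# Network CIDR. SubnetLen defaults to 24 here.
flannel_subnet_count() {
  local cidr="$1" subnet_len="${2:-24}"
  local prefix="${cidr#*/}"
  if [ "$subnet_len" -lt "$prefix" ] || [ "$subnet_len" -gt 32 ]; then
    echo "invalid SubnetLen $subnet_len for $cidr" >&2
    return 1
  fi
  # Each extra bit between the network prefix and SubnetLen doubles the count
  echo $(( 1 << (subnet_len - prefix) ))
}
```

To make the subnet size explicit instead of relying on the default, the config value can also carry a `SubnetLen` key, e.g. `'{ "Network": "172.18.0.0/16", "SubnetLen": 24 }'`.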

## On the master node:

yum install docker -y

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

## On the node(s):

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kubelet.service

systemctl restart kube-proxy.service

vim /usr/lib/systemd/system/docker.service

# Add this line under the [Service] section

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

systemctl daemon-reload

systemctl restart docker
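Docker 1.13 and later set the iptables FORWARD policy to DROP, which blocks forwarded pod traffic between nodes; that is why this line is needed. Edits to /usr/lib/systemd/system/docker.service, however, are overwritten when the docker package is upgraded. systemd also reads drop-in files from /etc/systemd/system/docker.service.d/*.conf, and a list setting such as `ExecStartPost=` in a drop-in is added to the unit's existing commands. A sketch that writes such a drop-in; the helper name and the file name forward-accept.conf are arbitrary choices.

```shell
# Hypothetical helper: write a systemd drop-in that re-opens the FORWARD chain
# after dockerd starts. DIR defaults to the standard drop-in directory for docker.service.
write_forward_accept_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}
```

After writing the file, `systemctl daemon-reload` and `systemctl restart docker` apply it, as in the steps above.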

# On all nodes

vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.202:5000'

systemctl restart docker

# On the master node (retry a few times if there are network problems)

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

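A pod is the smallest resource unit in k8s.

Main parts of a k8s YAML file:

apiVersion: v1    API version

kind: Pod    resource type

metadata:    metadata (name, labels, etc.)

spec:    detailed specification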

[root@master pod]# cat k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.202:5000/nginx:latest
    ports:
    - containerPort: 80
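The manifest above shows the four top-level fields in their usual nesting. As a sketch, the same structure can be generated from a heredoc and piped straight to `kubectl create -f -` (which reads the manifest from stdin), so no file is needed. `render_pod` is a hypothetical helper; like the file above, it names the container after the pod.

```shell
# Hypothetical helper: print a single-container Pod manifest.
# Usage: render_pod NAME IMAGE PORT [APP_LABEL]
render_pod() {
  local name="$1" image="$2" port="$3" app="${4:-web}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  labels:
    app: ${app}
spec:
  containers:
  - name: ${name}
    image: ${image}
    ports:
    - containerPort: ${port}
EOF
}
```

Usage: `render_pod nginx 10.0.0.202:5000/nginx:latest 80 | kubectl create -f -`.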

Create the pod from the YAML file:

[root@master pod]# kubectl create -f k8s_pod.yaml

pod "nginx" created

[root@master pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 12s

The pod is stuck in ContainerCreating, so its creation did not succeed. Check the details with kubectl describe pod nginx:

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

2m 2m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to 10.0.0.197

2m 43s 4 {kubelet 10.0.0.197} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

1m 6s 6 {kubelet 10.0.0.197} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

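The events show that the kubelet cannot pull the pod infrastructure image registry.access.redhat.com/rhel7/pod-infrastructure:latest. The local registry therefore needs a pod-infrastructure:latest base image: pull it with docker pull tianyebj/pod-infrastructure and push it to the local registry: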

docker pull tianyebj/pod-infrastructure

docker tag docker.io/tianyebj/pod-infrastructure 10.0.0.202:5000/rhel7/pod-infrastructure:latest

docker push 10.0.0.202:5000/rhel7/pod-infrastructure:latest

# Also update /etc/kubernetes/kubelet on the node(s):

# Set: KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.202:5000/rhel7/pod-infrastructure:latest"

systemctl restart kubelet.service
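To confirm that a push actually reached the local registry, its HTTP API can be queried directly: the `registry` image implements the Docker Registry HTTP API V2, where `GET /v2/_catalog` lists repositories and `GET /v2/<name>/tags/list` lists a repository's tags. A sketch; the helper names and the `REGISTRY`/`CURL` overrides are hypothetical (set `CURL=echo` to print the request instead of sending it).

```shell
# Hypothetical helpers for the local registry's V2 API.
# REGISTRY defaults to the address used in this article.
registry_catalog() {
  ${CURL:-curl} -s "http://${REGISTRY:-10.0.0.202:5000}/v2/_catalog"
}

# Usage: registry_tags REPOSITORY   e.g. registry_tags rhel7/pod-infrastructure
registry_tags() {
  ${CURL:-curl} -s "http://${REGISTRY:-10.0.0.202:5000}/v2/$1/tags/list"
}
```

After the push above, `registry_tags rhel7/pod-infrastructure` should list the `latest` tag.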

Check the pod creation process again:

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

14m 14m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to 10.0.0.197

13m 3m 7 {kubelet 10.0.0.197} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

13m 58s 54 {kubelet 10.0.0.197} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

45s 45s 1 {kubelet 10.0.0.197} spec.containers{nginx} Normal Pulling pulling image "10.0.0.202:5000/nginx:1.13"

46s 41s 2 {kubelet 10.0.0.197} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

41s 41s 1 {kubelet 10.0.0.197} spec.containers{nginx} Normal Pulled Successfully pulled image "10.0.0.202:5000/nginx:1.13"

41s 41s 1 {kubelet 10.0.0.197} spec.containers{nginx} Normal Created Created container with docker id 9254a49fc62c; Security:[seccomp=unconfined]

41s 41s 1 {kubelet 10.0.0.197} spec.containers{nginx} Normal Started Started container with docker id 9254a49fc62c

The pod has now been created successfully.

Here the pod infrastructure image has to be retagged with the name the kubelet is configured to use:

[root@master pod]# docker tag docker.io/tianyebj/pod-infrastructure 10.0.0.202:5000/rhel7/pod-infrastructure:latest

[root@master pod]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/registry latest f32a97de94e1 9 months ago 25.8 MB

docker.io/tianyebj/pod-infrastructure latest 34d3450d733b 2 years ago 205 MB

10.0.0.202:5000/rhel7/pod-infrastructure latest 34d3450d733b 2 years ago 205 MB

Force-delete the pod:

[root@master pod]# kubectl delete pod nginx --force --grace-period=0 -n default

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

pod "nginx" deleted

[root@master pod]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/b3log/solo latest 4e49320bec76 2 days ago 152 MB

10.0.0.202:5000/b3log/solo latest 4e49320bec76 2 days ago 152 MB

docker.io/mysql 5.7 1e4405fe1ea9 2 weeks ago 437 MB

10.0.0.202:5000/nginx latest 231d40e811cd 2 weeks ago 126 MB

docker.io/nginx latest 231d40e811cd 2 weeks ago 126 MB

docker.io/registry latest f32a97de94e1 9 months ago 25.8 MB

10.0.0.202:5000/rhel7/pod-infrastructure latest 34d3450d733b 2 years ago 205 MB

docker.io/tianyebj/pod-infrastructure latest 34d3450d733b 2 years ago 205 MB

[root@master pod]# docker tag docker.io/nginx 10.0.0.202:5000/nginx:latest

[root@master pod]# docker push 10.0.0.202:5000/nginx

[root@master pod]# docker tag docker.io/mysql:5.7 10.0.0.202:5000/mysql:5.7

[root@master pod]# docker push 10.0.0.202:5000/mysql

[root@master pod]# kubectl create -f k8s_pod.yaml

pod "nginx" created

[root@master pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 7s

[root@master pod]# cat k8s_pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.202:5000/nginx:latest
    ports:
    - containerPort: 80
  - name: solo
    image: 10.0.0.202:5000/b3log/solo:latest
    command: ["sleep","10000"]

[root@master pod]# kubectl create -f k8s_pod2.yaml

pod "test" created

[root@master pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 15h 172.18.57.2 10.0.0.197

test 2/2 Running 0 15s 172.18.39.2 10.0.0.163

docker inspect 3f6cdafa32f5

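K8s's advanced features are built on the pod resource, which is why a pod is created rather than a bare container.

Pod container: the infrastructure container

nginx container: the business (application) container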

[root@node1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3f6cdafa32f5 10.0.0.202:5000/nginx:latest "nginx -g 'daemon ..." 17 hours ago Up 17 hours k8s_nginx.c74a033c_nginx_default_e5a7a5ea-1bf8-11ea-950d-fa163ef9cf10_5e72b386

d0198f64f479 10.0.0.202:5000/rhel7/pod-infrastructure:latest "/pod" 17 hours ago Up 17 hours k8s_POD.7b6a03f3_nginx_default_e5a7a5ea-1bf8-11ea-950d-fa163ef9cf10_7ee05d13

Common operations on k8s resources:

kubectl create -f xxx.yaml

kubectl get pod    # or: kubectl get rc

kubectl describe pod nginx

kubectl delete pod nginx    # or: kubectl delete -f xxx.yaml

kubectl edit pod nginx

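rc (ReplicationController): keeps a specified number of pods alive at all times; an rc finds its pods through a label selector.

When a pod's containers are stopped, they are promptly started again (self-healing).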

[root@node2 ~]# docker ps -a -q

f14a9650d408

5a4a70671124

[root@node2 ~]# docker rm -f `docker ps -a -q`

f14a9650d408

5a4a70671124

[root@node2 ~]# docker ps -a -q

7f4142ea7eb9

2ec639b32132

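After the pod's containers were force-removed, they were immediately recreated and started (note the new container IDs): the kubelet keeps the containers of every pod that still exists in the API server running.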


[root@master rc]# cat k8s_rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.202:5000/nginx:1.13
        ports:
        - containerPort: 80

[root@master rc]# pwd

/root/k8s/rc

[root@master rc]# kubectl create -f k8s_rc.yaml

replicationcontroller "nginx" created

Check the status:

[root@master rc]# kubectl get rc

NAME DESIRED CURRENT READY AGE

nginx 5 5 5 9m

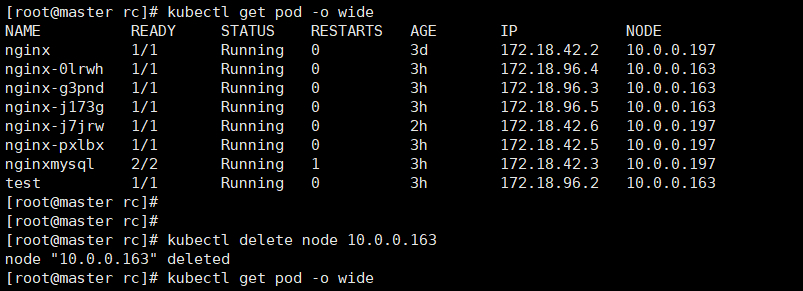

What an rc does:

When one of the nodes goes down, the rc recreates the pods that were running on it on the surviving nodes, so the desired replica count is restored.

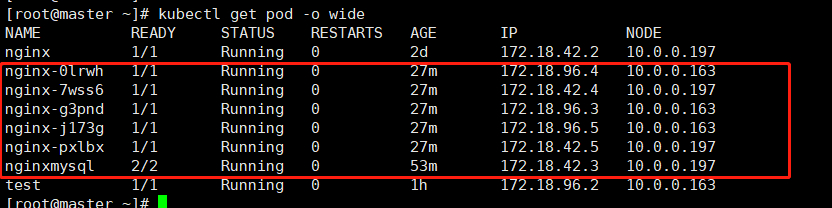

Example 1:

When a pod is deleted, a new pod is created immediately.

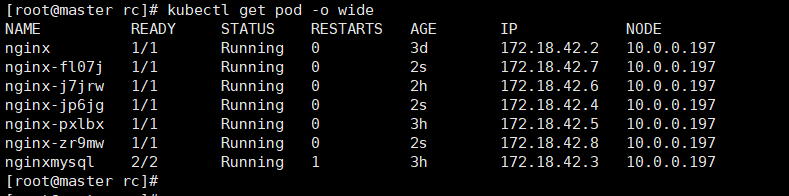

[root@master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 3d 172.18.42.2 10.0.0.197

nginx-0lrwh 1/1 Running 0 37m 172.18.96.4 10.0.0.163

nginx-7wss6 1/1 Running 0 37m 172.18.42.4 10.0.0.197

nginx-g3pnd 1/1 Running 0 37m 172.18.96.3 10.0.0.163

nginx-j173g 1/1 Running 0 37m 172.18.96.5 10.0.0.163

nginx-pxlbx 1/1 Running 0 37m 172.18.42.5 10.0.0.197

nginxmysql 2/2 Running 0 1h 172.18.42.3 10.0.0.197

test 1/1 Running 0 1h 172.18.96.2 10.0.0.163

[root@master rc]# kubectl delete pod nginx-7wss6

pod "nginx-7wss6" deleted

[root@master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 3d 172.18.42.2 10.0.0.197

nginx-0lrwh 1/1 Running 0 38m 172.18.96.4 10.0.0.163

nginx-g3pnd 1/1 Running 0 38m 172.18.96.3 10.0.0.163

nginx-j173g 1/1 Running 0 38m 172.18.96.5 10.0.0.163

nginx-j7jrw 1/1 Running 0 3s 172.18.42.6 10.0.0.197

nginx-pxlbx 1/1 Running 0 38m 172.18.42.5 10.0.0.197

nginxmysql 2/2 Running 0 1h 172.18.42.3 10.0.0.197

test 1/1 Running 0 1h 172.18.96.2 10.0.0.163

[root@master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 3d 172.18.42.2 10.0.0.197

nginx-0lrwh 1/1 Running 0 3h 172.18.96.4 10.0.0.163

nginx-g3pnd 1/1 Running 0 3h 172.18.96.3 10.0.0.163

nginx-j173g 1/1 Running 0 3h 172.18.96.5 10.0.0.163

nginx-j7jrw 1/1 Running 0 2h 172.18.42.6 10.0.0.197

nginx-pxlbx 1/1 Running 0 3h 172.18.42.5 10.0.0.197

nginxmysql 2/2 Running 1 3h 172.18.42.3 10.0.0.197

test 1/1 Running 0 3h 172.18.96.2 10.0.0.163

[root@master rc]# kubectl delete node 10.0.0.163

node "10.0.0.163" deleted

[root@master rc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 3d 172.18.42.2 10.0.0.197

nginx-fl07j 1/1 Running 0 2s 172.18.42.7 10.0.0.197

nginx-j7jrw 1/1 Running 0 2h 172.18.42.6 10.0.0.197

nginx-jp6jg 1/1 Running 0 2s 172.18.42.4 10.0.0.197

nginx-pxlbx 1/1 Running 0 3h 172.18.42.5 10.0.0.197

nginx-zr9mw 1/1 Running 0 2s 172.18.42.8 10.0.0.197

nginxmysql 2/2 Running 1 3h 172.18.42.3 10.0.0.197

After the kubelet on node2 is restarted, the node registers itself with the cluster again automatically.

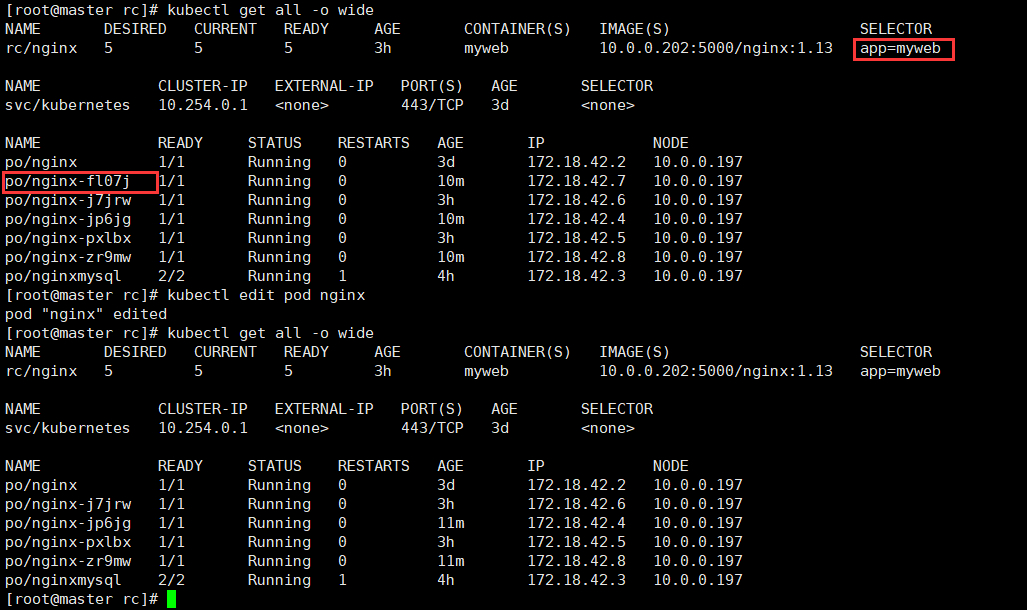

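After running [root@master rc]# kubectl edit pod nginx and changing the pod's label to app: myweb, the rc sees one pod more than desired and by default removes the youngest pod.

So the pods of an rc are maintained through its label selector.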
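The behaviour above (count the pods matching the selector, create the shortfall or delete the surplus, youngest first) can be modelled in a few lines. This is a heavily simplified, hypothetical sketch: the real controller also prefers to delete pods that are unscheduled, pending or not ready before it considers age, and it works on live API objects rather than text lines.

```shell
# Hypothetical, simplified model of one ReplicationController reconcile step.
# stdin: one pod per line as "<name> <app-label> <age-in-seconds>".
# Prints "create <n>" or one "delete <pod>" line per surplus pod (youngest first).
rc_reconcile() {
  local desired="$1" selector="$2" matching current
  # Keep only pods whose label equals the selector, sorted youngest (smallest age) first
  matching=$(awk -v s="$selector" '$2 == s' | sort -k3,3n)
  current=0
  if [ -n "$matching" ]; then
    current=$(printf '%s\n' "$matching" | wc -l | tr -d ' ')
  fi
  if [ "$current" -lt "$desired" ]; then
    echo "create $((desired - current))"
  elif [ "$current" -gt "$desired" ]; then
    printf '%s\n' "$matching" | head -n "$((current - desired))" | awk '{print "delete " $1}'
  fi
}
```

Relabelling a pod so that it matches changes the matching count, which is exactly what triggered the deletion above.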

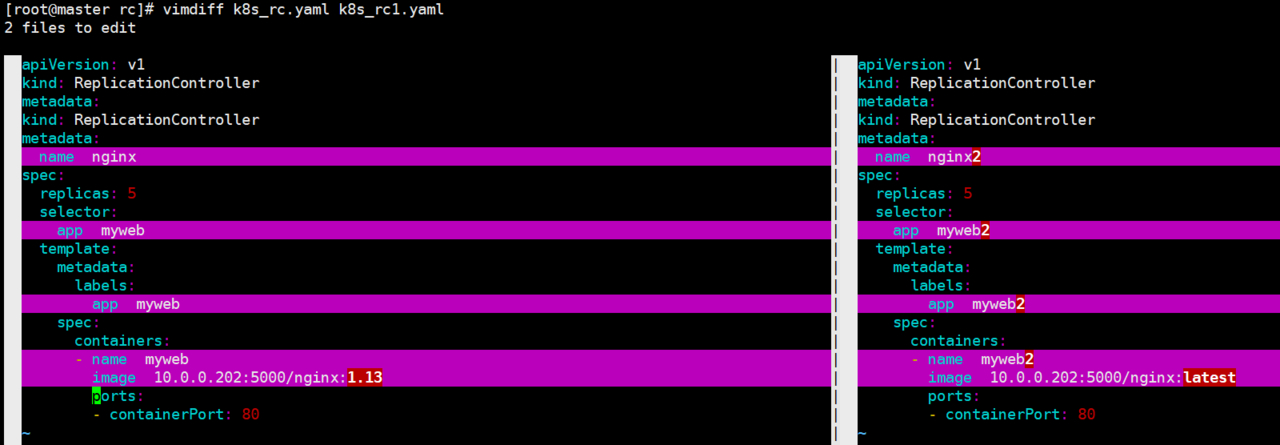

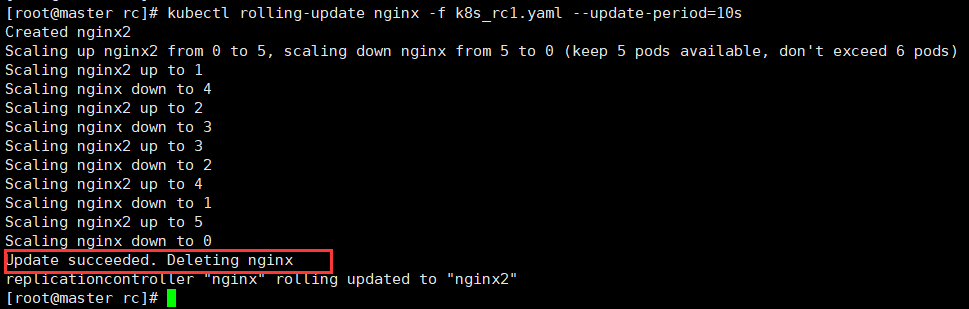

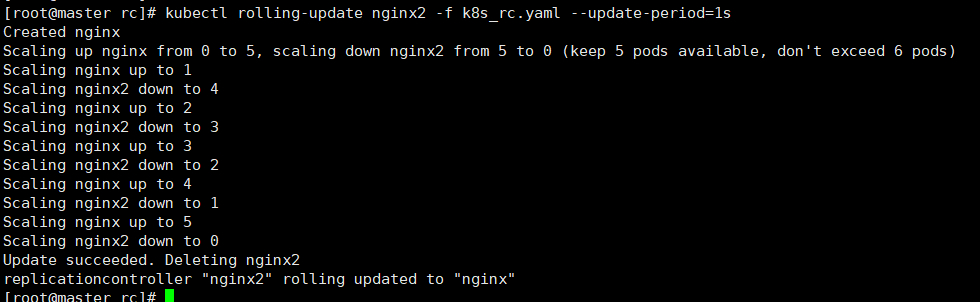

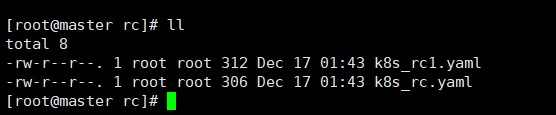

[root@master rc]# kubectl rolling-update nginx -f k8s_rc1.yaml --update-period=10s

--update-period=10s: wait 10s between updating pods

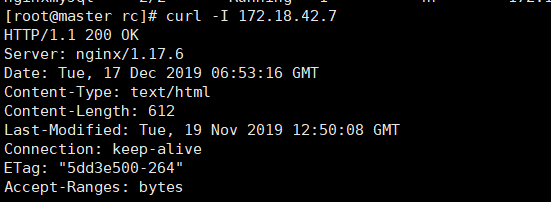

Pick one pod and check its nginx version:

The upgrade succeeded.

[root@master rc]# kubectl rolling-update nginx2 -f k8s_rc.yaml --update-period=1s

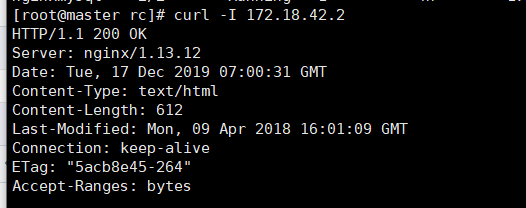

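Version test:

To sum up, the main characteristics and roles of an RC (ReplicationController; its successor is the ReplicaSet):

◎ In most cases, we define an RC to create pods and automatically control their replica count.

◎ An RC contains a complete pod definition template.

◎ An RC controls pod replicas automatically through the Label Selector mechanism.

◎ Changing the pod replica count in an RC scales the pods out or in.

◎ Changing the image version in the RC's pod template (and applying it with kubectl rolling-update) performs a rolling upgrade of the pods.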

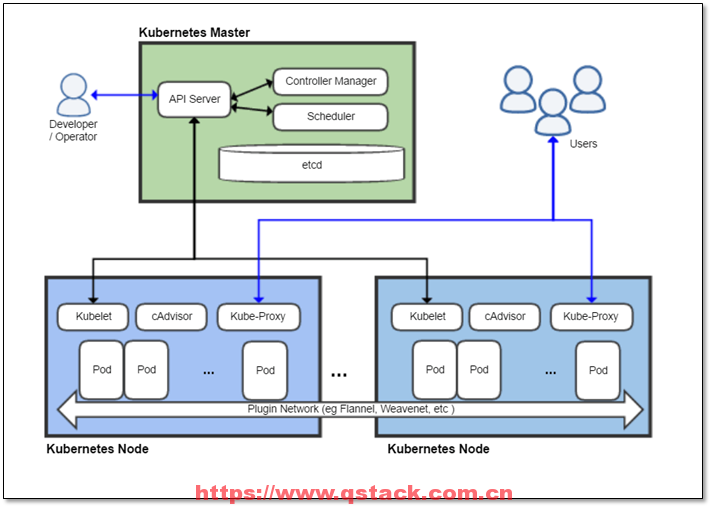

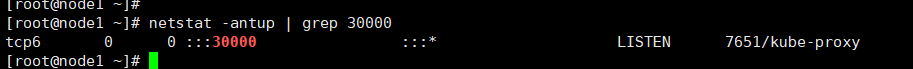

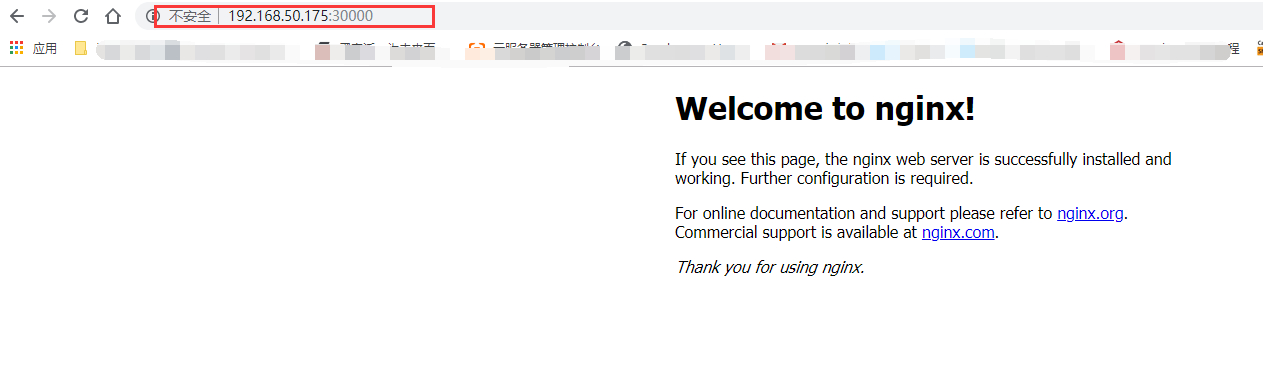

A Service exposes the pods' ports. As the architecture diagram shows, traffic to any Service is forwarded through the kube-proxy component.

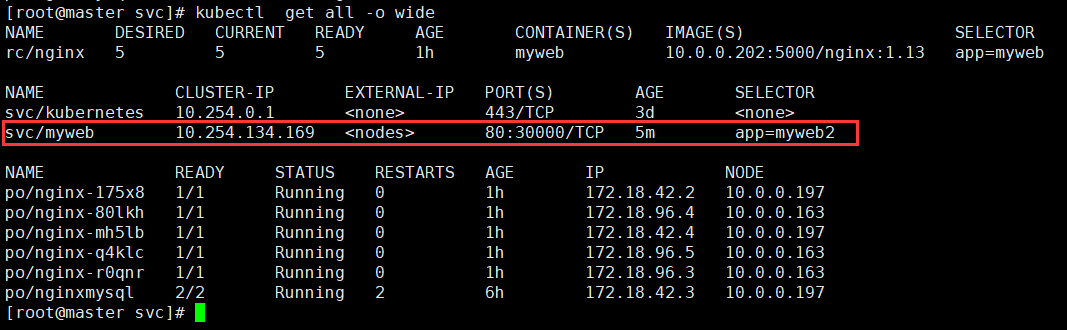

[root@master svc]# cat k8s_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort #ClusterIP
  ports:
    - port: 80 #clusterIP
      nodePort: 30000 #node port
      targetPort: 80 #pod port
  selector:
    app: myweb2

[root@master svc]# kubectl create -f k8s_svc.yaml

Note:

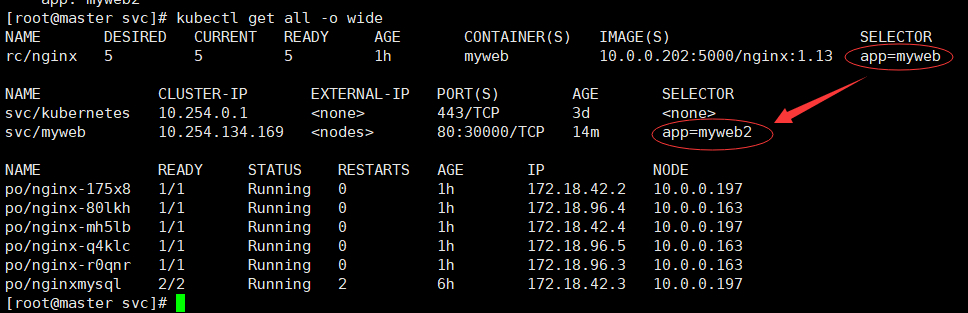

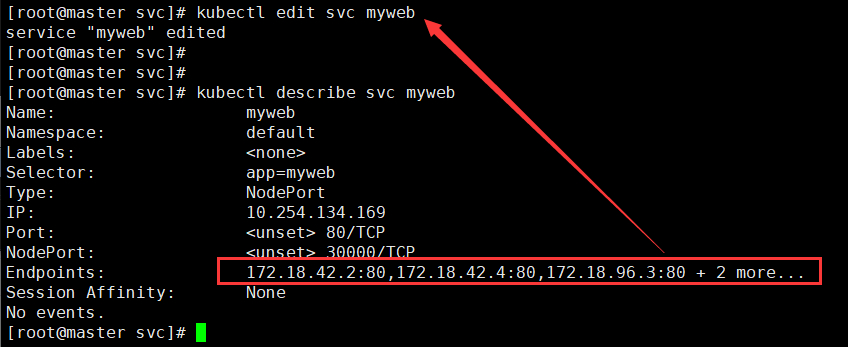

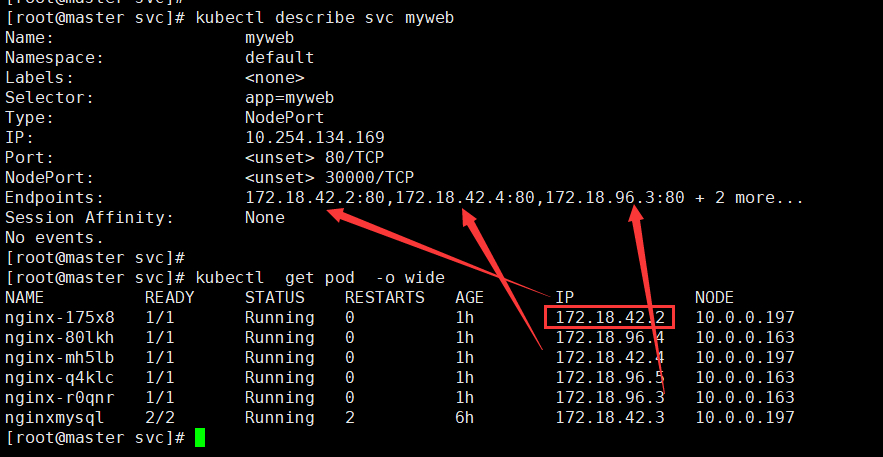

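The Service's label selector must match the rc's pod labels; otherwise the backend pods cannot be reached.

Use [root@master svc]# kubectl edit svc myweb to change the selector so that it matches the rc's label.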
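A Service (like an rc) uses equality-based matching: a pod is selected only if it carries every key=value pair in the selector. A hypothetical sketch of that rule over comma-separated label strings shows why the original selector `app: myweb2` matched none of the rc's `app: myweb` pods. On a live cluster, `kubectl get endpoints myweb` shows an empty endpoint list in that situation.

```shell
# Hypothetical helper: equality-based label selector matching.
# Usage: selector_matches "k1=v1,k2=v2" "k1=v1,k2=v2,k3=v3"
# Succeeds only if every pair in the selector appears in the labels.
selector_matches() {
  local selector="$1" labels="$2" pair
  local IFS=','
  for pair in $selector; do
    case ",$labels," in
      *",$pair,"*) ;;          # pair present, keep checking
      *) return 1 ;;           # a required pair is missing
    esac
  done
  return 0
}
```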

[root@master svc]# kubectl scale rc nginx --replicas=2

This is the command for adjusting the number of replicas.

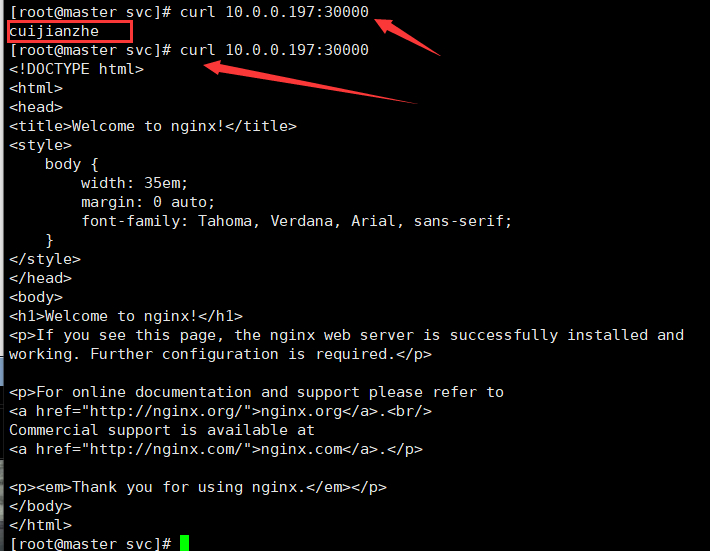

[root@master svc]# kubectl exec -it nginx-175x8 /bin/bash

root@nginx-175x8:/# cd /usr/share/nginx/html/

root@nginx-175x8:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-175x8:/usr/share/nginx/html# echo 'cuijianzhe' > index.html

Test:

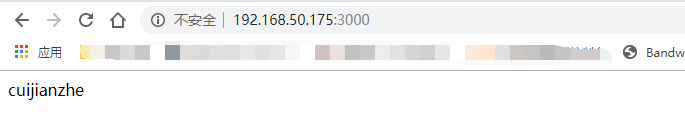

[root@master svc]# vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

[root@master svc]# systemctl restart kube-apiserver
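The apiserver only accepts nodePort values inside `--service-node-port-range`, which defaults to 30000-32767; that is why nodePort 3000 below needs the wider range. A sketch of the check; `nodeport_in_range` is a hypothetical helper.

```shell
# Hypothetical helper: is PORT inside a "low-high" node port range?
# Usage: nodeport_in_range PORT [RANGE]   (RANGE defaults to 30000-32767)
nodeport_in_range() {
  local port="$1" range="${2:-30000-32767}"
  local low="${range%-*}" high="${range#*-}"
  [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}
```

Note that a range as wide as 3000-50000 also covers ports used on these hosts, such as 8080 (apiserver) and 10250 (kubelet), so an automatically allocated nodePort could collide with a host service.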

[root@master svc]# cat k8s_svc2.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb2
spec:
  type: NodePort #ClusterIP
  ports:
    - port: 80 #clusterIP
      nodePort: 3000 #node port
      targetPort: 80 #pod port
  selector:
    app: myweb2

Edit the Service so that its selector uses the same label, app: myweb:

kubectl edit svc myweb2

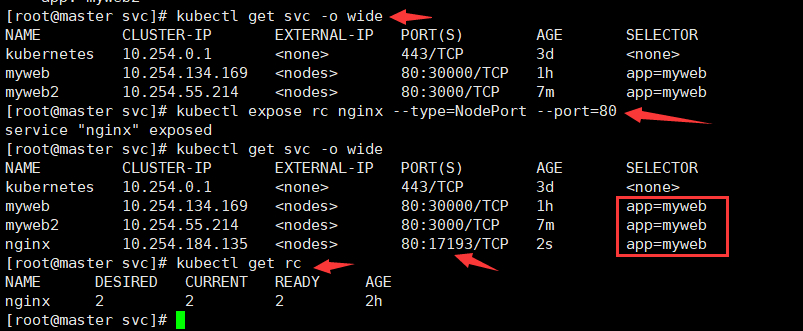

[root@master svc]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 <none> 443/TCP 3d <none>

myweb 10.254.134.169 <nodes> 80:30000/TCP 55m app=myweb

myweb2 10.254.55.214 <nodes> 80:3000/TCP 2m app=myweb

kubectl scale rc nginx --replicas=2

kubectl exec -it pod_name /bin/bash

kubectl expose rc nginx --type=NodePort --port=80

Rolling-updating an rc can interrupt access to the service, so Kubernetes introduced the Deployment resource.

[root@master deploy]# cat k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.202:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 100m

[root@master deploy]# kubectl create -f k8s_deploy.yaml

deployment "nginx-deployment" created

[root@master deploy]# kubectl get deploy

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-deployment 3 3 3 3 18s

Expose the deployment on a port; this creates a new Service:

[root@master deploy]# kubectl expose deployment nginx-deployment --type=NodePort --port=80

service "nginx-deployment" exposed

[root@master deploy]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 3d

myweb 10.254.134.169 <nodes> 80:30000/TCP 17h

myweb2 10.254.55.214 <nodes> 80:3000/TCP 16h

nginx-deployment 10.254.2.186 <nodes> 80:33604/TCP 13s

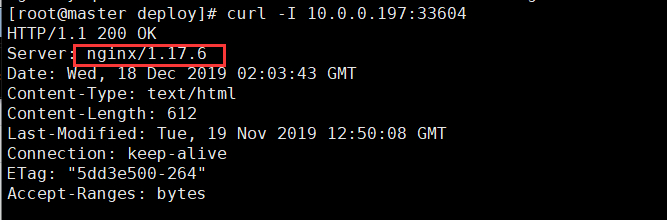

Test with curl:

Then edit the deployment's configuration:

[root@master deploy]# kubectl edit deployment nginx-deployment

# change  - image: 10.0.0.202:5000/nginx:1.13  to

- image: 10.0.0.202:5000/nginx:latest

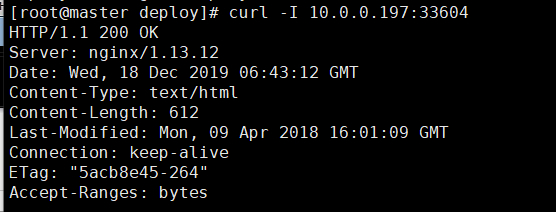

curl confirms that the upgrade is complete:

[root@master deploy]# kubectl edit deployment nginx-deployment

...
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
...
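`maxSurge` caps how many pods may exist above the desired count during a rollout, and `maxUnavailable` caps how many may be unavailable below it. A hypothetical sketch of the resulting bounds. It assumes, per the Deployment documentation, that a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down; it also assumes that if both resolve to 0 the controller allows one unavailable pod so the rollout can progress.

```shell
# Hypothetical helper: pod-count bounds during a RollingUpdate.
# Usage: rollout_bounds REPLICAS MAX_SURGE MAX_UNAVAILABLE   (absolute or "N%")
rollout_bounds() {
  local replicas="$1" surge="$2" unavail="$3"
  case "$surge" in
    *%) surge=$(( (replicas * ${surge%?} + 99) / 100 )) ;;   # percentage rounds up
  esac
  case "$unavail" in
    *%) unavail=$(( replicas * ${unavail%?} / 100 )) ;;      # percentage rounds down
  esac
  # Assumed fencepost rule: never let both limits be zero
  if [ "$surge" -eq 0 ] && [ "$unavail" -eq 0 ]; then
    unavail=1
  fi
  echo "max_pods=$((replicas + surge)) min_available=$((replicas - unavail))"
}
```

With the values above (3 replicas, maxSurge 1, maxUnavailable 1), at most 4 pods exist and at least 2 stay available during the update. For comparison, the apps/v1 API defaults both fields to 25%.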

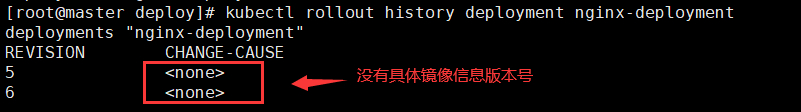

[root@master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

2 <none>

3 <none>

[root@master deploy]# kubectl rollout undo deployment nginx-deployment

deployment "nginx-deployment" rolled back

[root@master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

3 <none>

4 <none>

Revisions after the rollback:

Roll back to a specific revision:

[root@master deploy]# kubectl rollout undo deployment nginx-deployment --to-revision=4

deployment "nginx-deployment" rolled back

[root@master deploy]# kubectl delete deployment nginx-deployment

deployment "nginx-deployment" deleted

[root@master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.202:5000/nginx:1.13 --replicas=3 --record

Upgrade the image version:

[root@master deploy]# kubectl set image deployment nginx nginx=10.0.0.202:5000/nginx:1.16

deployment "nginx" image updated

[root@master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.202:5000/nginx:1.13 --replicas=3 --record

2 kubectl edit deployment nginx

3 kubectl set image deployment nginx nginx=10.0.0.202:5000/nginx:1.16

[root@master deploy]# kubectl set image deployment nginx nginx=10.0.0.202:5000/nginx:latest

deployment "nginx" image updated

[root@master deploy]# kubectl rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 kubectl run nginx --image=10.0.0.202:5000/nginx:1.13 --replicas=3 --record

3 kubectl set image deployment nginx nginx=10.0.0.202:5000/nginx:1.16

4 kubectl set image deployment nginx nginx=10.0.0.202:5000/nginx:latest

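Deployment upgrade and rollback

Create a deployment from the command line (with kubectl of this era, kubectl run creates a Deployment; since Kubernetes 1.18 it only creates a bare pod)

kubectl run nginx --image=10.0.0.202:5000/nginx:1.13 --replicas=3 --record

Upgrade the image from the command line

kubectl set image deploy nginx nginx=10.0.0.202:5000/nginx:1.15

List all revisions of the deployment

kubectl rollout history deployment nginx

Roll the deployment back to the previous revision

kubectl rollout undo deployment nginx

Roll the deployment back to a specific revision

kubectl rollout undo deployment nginx --to-revision=2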
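These commands can be combined into one upgrade step that waits for the rollout and rolls back if it fails. A minimal sketch; `upgrade_deployment` and the `KUBECTL` override (set it to `echo` for a dry run) are hypothetical, while `set image`, `rollout status` and `rollout undo` are real kubectl subcommands. On older clusters without a progress deadline, `rollout status` may keep waiting instead of failing.

```shell
# Hypothetical helper: set a new image, wait for the rollout, undo on failure.
# Usage: upgrade_deployment DEPLOYMENT CONTAINER IMAGE
upgrade_deployment() {
  local deploy="$1" container="$2" image="$3"
  local k="${KUBECTL:-kubectl}"
  $k set image "deployment/$deploy" "$container=$image" --record || return 1
  if ! $k rollout status "deployment/$deploy"; then
    # Return to the previous revision if the new one never becomes available
    $k rollout undo "deployment/$deploy"
    return 1
  fi
}
```

Usage: `upgrade_deployment nginx nginx 10.0.0.202:5000/nginx:1.16`.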

Inside K8s, containers reach each other through a Service's VIP (its cluster IP).

[root@master tomcat_demo]# cat mysql-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: 10.0.0.202:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '598941324'

[root@master tomcat_demo]# kubectl create -f mysql-rc.yaml

replicationcontroller "mysql" created

2. Create the MySQL Service YAML file, then create the Service resource

[root@master tomcat_demo]# cat mysql-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  # type: NodePort #ClusterIP
  ports:
    - port: 3306
      targetPort: 3306 #pod port
  selector:
    app: mysql

[root@master tomcat_demo]# kubectl create -f mysql-svc.yaml

service "mysql" created

[root@master tomcat_demo]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 4d

mysql 10.254.76.200 <none> 3306/TCP 1m

myweb 10.254.134.169 <nodes> 80:30000/TCP 1d

myweb2 10.254.55.214 <nodes> 80:3000/TCP 1d

nginx-deployment 10.254.2.186 <nodes> 80:33604/TCP 1d

[root@master tomcat_demo]# cat tomcat-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: tomcat
spec:
  replicas: 1
  selector:
    app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
      - name: tomcat
        image: 10.0.0.202:5000/tomcat:latest
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: '10.254.76.200' # the MySQL CLUSTER-IP from the kubectl get svc output above
        - name: MYSQL_SERVICE_PORT
          value: '3306'
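Hard-coding the cluster IP works, but the kubelet also injects `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` environment variables (service name upper-cased, dashes turned into underscores) for each active Service that exists when the pod starts. Since the mysql Service is created before the tomcat rc here, the same two variables would be present even without the explicit `env` entries. A hypothetical sketch of the naming rule:

```shell
# Hypothetical helper: names of the env vars Kubernetes injects for a Service.
# Usage: svc_env_names SERVICE_NAME
svc_env_names() {
  local name
  # Upper-case letters and turn dashes into underscores
  name=$(printf '%s' "$1" | tr 'a-z-' 'A-Z_')
  echo "${name}_SERVICE_HOST ${name}_SERVICE_PORT"
}
```

Inside the tomcat container, `echo $MYSQL_SERVICE_HOST` should then print the mysql cluster IP.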

[root@master tomcat_demo]# cat tomcat-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  type: NodePort #ClusterIP
  ports:
    - port: 8080 #clusterIP
      nodePort: 30008 #node port
      targetPort: 8080 #pod port
  selector:
    app: tomcat

[root@master tomcat_demo]# kubectl create -f tomcat-svc.yaml

service "tomcat" created

Test the Tomcat service: